The U.S. Food and Drug Administration (FDA) made a calculated move into the AI age this week with the official launch of Elsa, a generative AI tool built to assist—but not replace—its workforce. Developed in a secure GovCloud environment and isolated from industry-submitted data, Elsa is already being used to summarize adverse event reports, compare drug labels, generate code for internal use, and assist with inspection prioritization.

Elsa is not a decision-maker. It does not approve drugs, draft regulations, or render verdicts on public health risks. It is a language model built to assist FDA personnel in routine, document-heavy tasks where speed and consistency can make meaningful differences—particularly in areas like signal detection, nonclinical workflow support, and literature synthesis.

FDA Commissioner Dr. Marty Makary announced that the rollout came in ahead of schedule and under budget, following a successful pilot earlier this spring. In an era where government digital transformations tend to come with ballooning costs and endless delays, that’s a noteworthy development.

Yet with all innovation, especially when it affects regulatory systems, comes a need for thoughtful scrutiny. While Elsa’s current scope is narrow, its existence raises questions not just for the FDA, but for the entire Department of Health and Human Services and the broader federal ecosystem.

Crucially, Elsa’s outputs must be reviewed, verified, and confirmed by FDA staff. It operates entirely within agency infrastructure and is explicitly forbidden from making any regulatory determinations or initiating enforcement.

This distinction between supporting workflows and directing policy is not trivial. FDA staff remain fully responsible for interpreting data, forming conclusions, and taking official positions. Elsa may draft, summarize, and structure, but the judgment remains human. This separation is the single most important safeguard against the inappropriate delegation of authority to machines.

It’s also why Elsa stands apart from earlier, more controversial uses of automation in the public sector. Past efforts in predictive policing, algorithmic sentencing, and even automated benefits denial have revealed the dangers of opaque AI systems making consequential decisions without human oversight. The FDA’s approach appears to reject that model entirely, at least for now.

The FDA is not alone. Across the federal government, other agencies are experimenting with AI integration under similarly constrained and supervised use cases:

The Centers for Medicare & Medicaid Services (CMS) is piloting LLMs to assist in claim pattern recognition and policy document drafting, while ensuring all outputs are reviewed by analysts.

The Department of Veterans Affairs (VA) has tested natural language tools to help structure mental health screening notes—again, always with clinician review.

The Department of Energy (DOE) is using generative models to help draft grant summaries and technical abstracts.

The IRS is rumored to be testing narrow AI models to assist with document classification and legal language harmonization—not audit selection.

The NIH’s Office of Portfolio Analysis has explored using LLMs to help parse funding overlaps and literature trends, under human verification.

In all of these efforts, including Elsa, the pattern is the same: information augmentation, not decision automation.

In a press release, FDA Chief AI Officer Jeremy Walsh said:

“Today marks the dawn of the AI era at the FDA with the release of Elsa, AI is no longer a distant promise but a dynamic force enhancing and optimizing the performance and potential of every employee. As we learn how employees are using the tool, our development team will be able to add capabilities and grow with the needs of employees and the agency.”

On X, FDA shared:

“Today, the FDA launched Elsa, a generative AI tool designed to help employees—from scientific reviewers to investigators—work more efficiently. This innovative tool modernizes agency functions and leverages AI capabilities to better serve the American people.

Elsa in Action

➤ Accelerate clinical protocol reviews

➤ Shorten the time needed for scientific evaluations

➤ Identify high-priority inspection targets

➤ Perform faster label comparisons

➤ Summarize adverse events to support safety profile assessments

➤ Generate code to help develop databases for nonclinical applications.”

Even assuming the FDA has taken a cautious approach, several forward-looking issues merit public attention:

Messaging

Enthusiasm over AI is fine, but the claim that “every employee” will be using AI is likely to raise eyebrows.

How will Elsa shape regulatory narratives over time?

If the model introduces even slight framing biases in summaries, could that affect how reviewers interpret findings or draft safety communications? Are there procedures in place to identify and mitigate this?

How will Elsa evolve?

Will it be re-trained periodically? How will updates be logged and tracked? If performance drifts or outputs change, will that be observable to users or reviewers?

Will other AI models be introduced without equivalent oversight?

Elsa is internally managed. But future budget pressures or cross-agency “efficiency drives” may encourage procurement of third-party or open-source models with less transparent architectures.

What safeguards will HHS adopt as use expands?

As Elsa’s functionality expands, or as AI is deployed across the CDC, NIH, and other HHS divisions, will consistent oversight standards be adopted? Will there be a shared ethics framework? A formal inter-agency audit group?

Can AI-generated content be subject to FOIA or litigation discovery?

If Elsa drafts part of a communication or risk summary that later becomes the subject of litigation, will courts compel its disclosure? Will internal logs be preserved and accessible?

What instructions are FDA staff given on allowed uses?

Internal guidelines will almost certainly govern when and how Elsa can be used. But are those guidelines codified? Auditable? What mechanisms exist to ensure they are followed?

Finally, Elsa’s rollout raises subtle but important questions about the future structure of FDA work itself. Will time saved on documentation and review synthesis be reallocated toward deeper evaluation and stakeholder engagement? Will staff need new competencies in prompt design, model interpretation, or AI validation? These changes are neither disruptive nor dystopian—but they are real, and they deserve conscious planning.

The FDA’s deployment of Elsa is not revolutionary—it is iterative. It does not transform government. It improves process. The agency deserves credit for prioritizing human oversight, building in secure environments, and avoiding the temptation to outsource sensitive functions to black-box vendors.

Elsa, if managed correctly, can make government more efficient without eroding trust. But that outcome depends not on the tool itself, but on the continued vigilance of the people using it.

Popular Rationalism will continue monitoring this space—and we encourage the public, media, and policymakers to do the same.

What do you think? Is this a welcome development, or will we see problems that have to be managed? We want to know. Here’s a PopRat poll; feel free to leave a comment with your opinion!


.png) 1 month_ago

11

1 month_ago

11

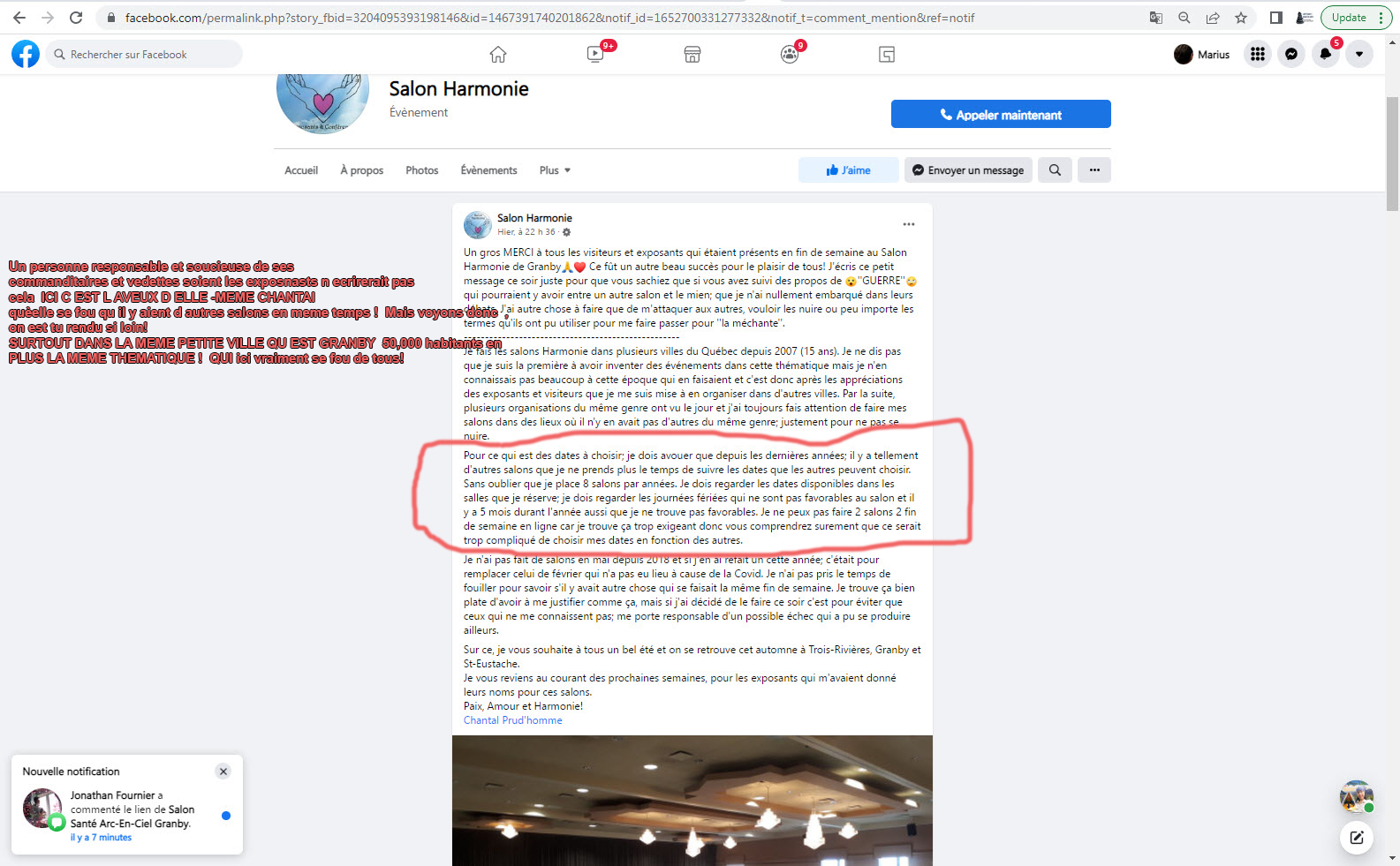

French (CA)

French (CA)